For want of a nail, the shoe was lost; for want of a shoe, the horse was lost; for want of a horse, the rider was lost; for want of a rider, the message was lost; for want of a message, the battle was lost; for want of a battle, the kingdom was lost...

Thursday, September 12, 2013

Win 8.1/IE 11 - Cross Browser Testing, Device Testing and more cool features

Test Effectiveness, Test Efficiency, Defect Removal Efficiency (DRE) - Jargon :)

I was talking to a colleague today about the differences among these test metrics, and I am sharing it with you all in case it helps :)
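As a quick reference, DRE is commonly computed as the defects found before release divided by all defects (those found before release plus those reported after it), expressed as a percentage. Here is a minimal Python sketch of that formulation. Test effectiveness and test efficiency are defined differently from one organization to another, so they are left out; the function name and the sample numbers are hypothetical.

```python
def defect_removal_efficiency(found_before_release: int, found_after_release: int) -> float:
    """Return DRE as a percentage.

    Commonly cited formulation (an assumption here, not a team-specific rule):
        DRE = found_before_release / (found_before_release + found_after_release) * 100
    """
    if found_before_release < 0 or found_after_release < 0:
        raise ValueError("defect counts must be non-negative")
    total = found_before_release + found_after_release
    if total == 0:
        # No defects recorded at all: the ratio is undefined, so say so explicitly.
        raise ValueError("DRE is undefined when no defects were found")
    return 100.0 * found_before_release / total


if __name__ == "__main__":
    # Hypothetical numbers: 90 defects caught before release, 10 reported by users after it.
    print(f"DRE = {defect_removal_efficiency(90, 10):.1f}%")  # DRE = 90.0%
```

A DRE close to 100% means very few defects escaped to production; it says nothing by itself about how much effort it took to find them.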

Saturday, August 17, 2013

Mob Testing (inspired by Mob Programming)

Testers & QAs,

There is something interesting that we might want to try. I came across an emerging agile practice called Mob Programming. In a nutshell, it takes pair programming to the next level by coding as a team. The idea is simple: you can write the best code when one person is actually typing and the rest of the team is providing input. This has helped teams deliver builds without bugs. If you think about it, you are developing, unit testing, reviewing and testing the code all at once. How cool is that?

Read about it @ http://mobprogramming.org/mob-programming-basics/

Driver/Navigators

We follow a “Driver/Navigator” style of work, which I originally learned from Llewellyn Falco as a pair programming technique. Llewellyn’s approach is different from any other I have been shown, have seen, or have read about (and I think he invented it or evolved into it.) In this “Driver/Navigator” pattern, the Navigator is doing the thinking about the direction we want to go, and then verbally describes and discusses the next steps of what the code must do. The Driver is translating the spoken English into code. In other words, all code written goes from the brain and mouth of the Navigator through the ears and hands of the Driver into the computer. If that doesn’t make sense, we’ll probably try to do a video about that or a more complete description sometime soon.

In our use of this pattern, there is one Driver, and the rest of the team joins in as Navigators and Researchers. One important benefit is that we are communicating and discussing our design to everyone on the team. Everyone stays involved and informed.

The main work is the Navigators “thinking, describing, discussing, and steering” what we are designing/developing. The coding done by the Driver is simply the mechanics of getting actual code into the computer. The Driver is also often involved in the discussions, but her main job is to translate the ideas into code. Of course, being great at writing code is important and useful (as is knowing the languages, IDE and tools, etc.), but the real work of software development is the problem solving, not the typing.

If the Driver is not highly skilled, the rest of the team will help by guiding the Driver in how to create the code; we often suggest things like keyboard shortcuts, language features, Clean Code practices, etc. This is a learning opportunity for the Driver, and we transfer knowledge quickly throughout the team, which quickly improves everyone’s coding skills.

Imagine how productive it could be if we applied the same idea to testing. I know exploratory testing is cool, but what would be even better is for the entire test team to get into a room while one person takes on the responsibility of "driver": he follows the instructions of the team and documents/executes the test cases. The rest of the team plays "navigators", suggesting scenarios and different test conditions. This would be a great demonstration of collective IQ, and things like emails, meetings and triage calls could be eliminated to cut down on unproductive time.

Let me know your thoughts and if you find this interesting, we can pilot this in one of your projects and evangelize it.

Tuesday, May 7, 2013

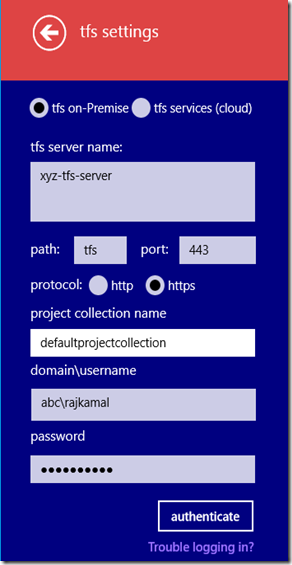

TFS Live Configuration - TFS Login Details

Enable Alternate Credentials for Hosted TFS / TFS Service

Step 3: Go to “Credentials” tab in “User Profile” window

Step 4: Select “Enable Alternate Credentials”

Step 5: Set a new password (Note: This can be the same password as your Windows Live ID, so it is easy to remember)

Friday, April 5, 2013

Don't be afraid to take up a project that's failing

Funny as it may sound, things get worse, but then they get better, and the experience of turning a failure into a success is unforgettable. You will learn things that you won't learn if you always play it safe. A sailor who hasn't faced a storm is not really a true sailor. An escalated project has high visibility, and you have an amazing opportunity to fix things; you might be surprised at the end.

Testing in Production?

I don't promote it, but it does happen. We should let these developers know that they are living on the edge with a time bomb ticking, and it's only a matter of time before it explodes. Why take the chance when you can test the code and then release it?

Testing - Recursive Features

Though my comment above on FB was on the lighter side, I feel that we should build testability around such recursive features, instead of confining our thinking to just one level and leaving it there.

Saga of Unsung Heroes

‘Tester Testifies’ is my tribute to all the testers in the world: the unsung heroes who save millions of dollars by finding bugs and still go unheard.

Saturday, March 23, 2013

Software “Test Confidence Report” [Test Sign-Off]

I would like to share something new that I learnt from one of my recent engagements. Thanks to Anand Prabhala, my Project Manager, who triggered this chain of thought by asking one simple question: “Raj, keeping all the bug metrics and test execution reports aside, as a test lead, what is your ‘test confidence’ to release this application into UAT?”

If you are in testing, I am sure you have found yourself in similar situations many times before. It was a déjà vu moment, and I found myself saying something like ‘Well, it depends. I can’t tell you that, as it’s very subjective and depends entirely on an individual’s perception of quality. Whatever I say might be my own opinion, not necessarily the opinion of my test team. You should look at my test execution report and bug metrics as the true indicators.’

I knew that he had a point. Metrics are good, but we should be able to convert them into something really meaningful and actionable, so that we can take a decision with confidence and conviction. As a test lead, providing any number of test metrics is not enough if you can’t take a confident decision by looking at them. That’s only half the job done.

I thought it was going to be a no-brainer: call a meeting, ask my test team to vote, and we would know our test confidence. Funny as it may sound, you may end up even more confused, because confidence is highly subjective and can’t be arrived at just by taking a poll. Besides, it wouldn’t be fair, as confidence is like temperature: it can fluctuate drastically based on circumstances, moods, emotions, pressure and state of mind on a given day.

Most of the time there is a strong correlation between a tester’s confidence and metrics like failed tests and active bugs, but there can be exceptions. Imagine a feature that works well but has a few eyesores that have been bothering the tester for quite some time, and your test execution and bug reports don’t flag them as alarming through standard metrics like % of test cases passed or the number of critical/high severity bugs. Test confidence could also be low if the tester believes the end user is going to hate the feature and it must be fixed. In other words, test confidence often can’t be concluded to be “high” just because 90% of test cases passed or there are no S1/S2 issues, and that’s where we need to give weight to the tester’s feeling about the quality of that feature. Conversely, there could be scenarios where more test cases have failed and the metrics look terrible, but we know the failures won’t get in the way of UAT testing, so test confidence could be high or medium but not low.

A lot of the time, such stories don’t come out just by looking at plain numbers. Remember, we are now talking about an application that typically consists of a myriad of features. Your brain can’t be expected to accurately take into account your test confidence for each feature and do an intelligent summation for you. It only gets more complicated when those features are owned by a team of testers: the test lead’s job gets tougher, as they have to gather test confidence from all the testers and decide on the overall test confidence level of the team.

In reality, it might just be a matter of finding the real culprits that are bringing down your test confidence and targeting them. You may be surprised how swiftly those issues get fixed once they are identified and prioritized, but the trick is to identify them.

I thought, why don’t we add subjectivity to objectivity, as that was the missing ingredient? Let’s start measuring test confidence for each feature by looking at the real metrics for that feature. We took the out-of-the-box TFS requirement traceability report and added just one simple field called “test confidence”. The testers who owned each feature then assigned it a test confidence of high, medium or low. This is a marriage of test confidence and the industry-standard requirement traceability report :)

Finally, you can just add up the number of features with high, medium and low confidence and calculate the percentages. E.g. now I can say I am 87% highly confident, 12% medium confident and 1% low confident.

Note: This way I am not just saying whether I am confident or not. My test confidence is no longer a boolean value.

We passed this report on to our developers so they could prioritize bug fixing, with the aim of increasing test confidence where it was low or medium. Our development team no longer had to worry about a lot of things like severity, priority, stack ranking, usability issues, etc. We could make their lives easy by giving them a single indicator. This also helped our customers prioritize their testing and know which features were not ready yet.
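The roll-up described above is simple arithmetic, so here is a minimal Python sketch of it. It assumes each row of the traceability report is reduced to a feature name plus the high/medium/low value from the added “test confidence” field; `FeatureRow`, `confidence_summary` and `fix_first` are hypothetical names for illustration, not part of TFS, and the sample features are made up.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Dict, List

# Allowed values of the (hypothetical) "test confidence" field.
LEVELS = ("high", "medium", "low")


@dataclass
class FeatureRow:
    """One traceability-report row, reduced to what the roll-up needs (hypothetical)."""
    feature: str
    test_confidence: str  # "high", "medium" or "low", set by the owning tester


def confidence_summary(rows: List[FeatureRow]) -> Dict[str, float]:
    """Percentage of features at each confidence level."""
    if not rows:
        raise ValueError("no features to summarize")
    counts = Counter(row.test_confidence for row in rows)
    unexpected = set(counts) - set(LEVELS)
    if unexpected:
        raise ValueError(f"unexpected confidence values: {sorted(unexpected)}")
    # Counter returns 0 for a level nobody picked, so every level is reported.
    return {level: 100.0 * counts[level] / len(rows) for level in LEVELS}


def fix_first(rows: List[FeatureRow]) -> List[str]:
    """Features to hand to developers: low confidence first, then medium."""
    rank = {"low": 0, "medium": 1}
    flagged = [row for row in rows if row.test_confidence in rank]
    # sorted() is stable, so report order is kept within each level.
    return [row.feature for row in sorted(flagged, key=lambda row: rank[row.test_confidence])]


if __name__ == "__main__":
    report = [
        FeatureRow("Login", "high"),
        FeatureRow("Search", "high"),
        FeatureRow("Checkout", "medium"),
        FeatureRow("Reports", "low"),
    ]
    print(confidence_summary(report))  # {'high': 50.0, 'medium': 25.0, 'low': 25.0}
    print(fix_first(report))           # ['Reports', 'Checkout']
```

Rejecting unexpected values keeps a typo in the report (say, "med") from silently skewing the percentages.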

Do you want to use it in your next engagement? Let me know by posting your comments.

Friday, February 22, 2013

TFS Live - Windows 8 App

Note: If you are trying this with hosted TFS, please refer to slide 17 of this PPT for the configuration change.

http://apps.microsoft.com/windows/en-US/app/tfs-live/6af3a840-1dbd-47c3-8ec8-3f7c1e8ac6a9

Want to just try out and don't have TFS access?

- Enter “TFS Server” as https://ranjitgupta.visualstudio.com/defaultcollection

- Enter “domain\username or live id” as tfslive@outlook.com

- Enter “password” as nothing123$

TFS Live - Windows Phone 8

Note: If you are trying this with hosted TFS, please refer to slide 17 of this PPT for the configuration change.

Download link for Windows Phone 8 (or search the Windows Phone Store for TFS Live)

- Enter “TFS Server” as https://ranjitgupta.visualstudio.com/defaultcollection

- Enter “domain\username or live id” as tfslive@outlook.com

- Enter “password” as nothing123$

Thursday, February 14, 2013

Coded UI Usability Automation using JavaScript

After downloading the extension, add the DLL to the references of your Coded UI project. Please find the sample documentation below.

#Coded UI Usability Automation using JavaScript

# Send your feedback to rankumar@microsoft.com / rajkamal@microsoft.com

Thursday, January 17, 2013

TFS Live - Privacy Policy

TFS Live - Privacy Policy:

![image_thumb[2] image_thumb[2]](https://blogger.googleusercontent.com/img/b/R29vZ2xl/AVvXsEj033991cjuXPxdV9lIYP4Szk74sClAkO8lduJjegBUdpcNFLjK5UyjJ6f3NVBRe3VbV3hQ_lvdfVgIQaRHHnDHfuEQMDIn0pixMNleb5cav-IezbN30nLhpl5ZBerP-GP7kSBlYDafCJU/?imgmax=800)

![image_thumb[4] image_thumb[4]](https://blogger.googleusercontent.com/img/b/R29vZ2xl/AVvXsEiKRYMMAkGTnwjtTqyKvEUd5Ka9ppZH-FssNJeLiFxcd5fpCnVLLmeyFM9anMRQWK36HwalRatofnWE2sUOgCw2tBhm4jPArydx_8oR9_Fvvy31A5FdVRSaxQ6pK35uaC06KXumFA1wcj4/?imgmax=800)

![image_thumb[7] image_thumb[7]](https://blogger.googleusercontent.com/img/b/R29vZ2xl/AVvXsEjTbkk4o__C2aHGY0GjBMLrv3hUm_QHDuoLAO9YdRtp8xCtJ4Co3ioCEP9IQJhNyAi2Q4CSyRj7DulLaOlRz7eevgoEFoLceL4ygWog2zsKnDxX4McrEGaLxrht-_0oalMLU2liSAbezVs/?imgmax=800)

![clip_image001[6] clip_image001[6]](https://blogger.googleusercontent.com/img/b/R29vZ2xl/AVvXsEgEfQmzPtWXAYWYvTklHBeQp4CF6f7CIaFkgwm4H98Vf5bRA5Gt1AlYBU6sbIzU9nXDcEFqOxrri_xiEmnnKmjZFHHiYq0dfohnzA7Xept8FZI58rFhdGy9T_ip1Yd_W-6Yk1vUgJ6HC_0/?imgmax=800)

![clip_image002[6] clip_image002[6]](https://blogger.googleusercontent.com/img/b/R29vZ2xl/AVvXsEirw1nc7HO46678aW6sOAv3fDbERQ1wbKOJ2TJWu8KvEr043TTWvjRy5RxraKbJ6zrV2TxUkHzwToQQMtgLkivzY8BNvV1NNjqLJbwBR5WE2RsZ8zeZr-cbsrHjGI_Rf_WjfLBePACXYzE/?imgmax=800)